Access IBM Cloud Storage from a Python Jupyter Notebook

Cloud storage lets you store data and files in an off-site location that you access either over the public internet or through a dedicated private network. The data you send off-site for storage becomes the responsibility of a third-party cloud provider. The vendor owns, secures, operates, and supports the servers and related services, and ensures that you can access the data whenever you need it.

How do you access files in IBM Cloud Storage?

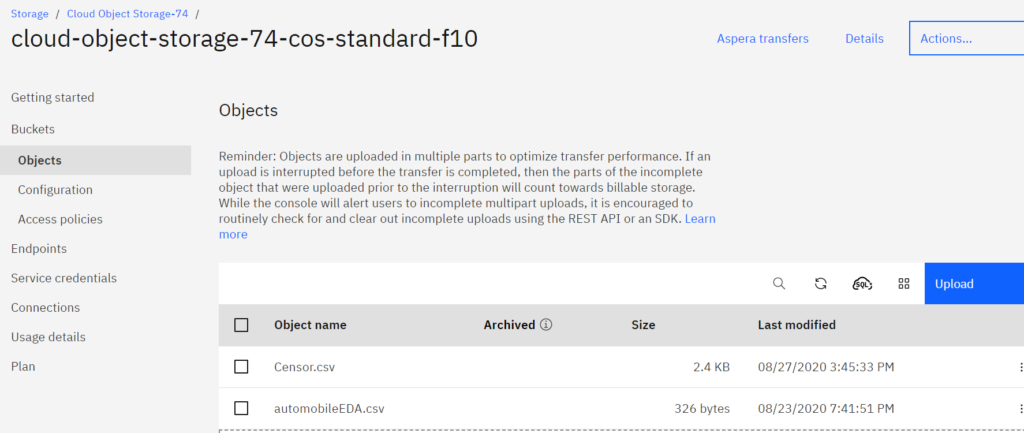

Assuming you have an account in IBM Cloud Storage, the first step is to create a bucket in Cloud Storage. You can then upload files or folders to it.

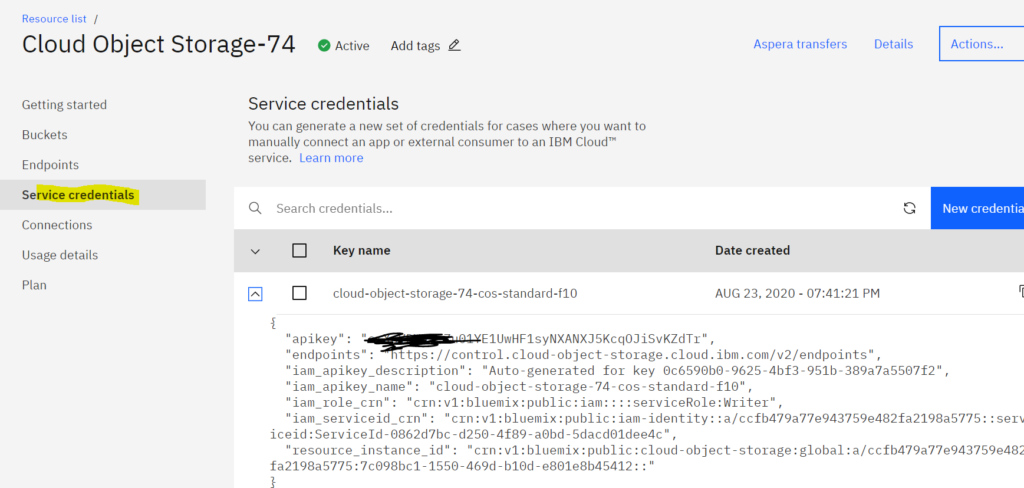
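The key you later pass to `get_object` is the name of the uploaded file, including any folder prefix. A minimal sketch for listing the keys in a bucket, assuming an already configured S3-style client (such as the `cos` client built later in this post) is passed in. `list_object_keys` is a hypothetical helper name, and only the first page of results is read:

```python
def list_object_keys(client, bucket, prefix=""):
    """Return the object keys from the first page of a bucket listing."""
    # Assumes the client exposes list_objects_v2, as S3-compatible clients do
    response = client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    # 'Contents' is absent from the response when nothing matches
    return [item["Key"] for item in response.get("Contents", [])]
```

Calling `list_object_keys(cos, 'cloud-object-storage-74-cos-standard-f10')` once the client exists should show whether the file was stored under the name you expect.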

Go to Service credentials and copy the API details.

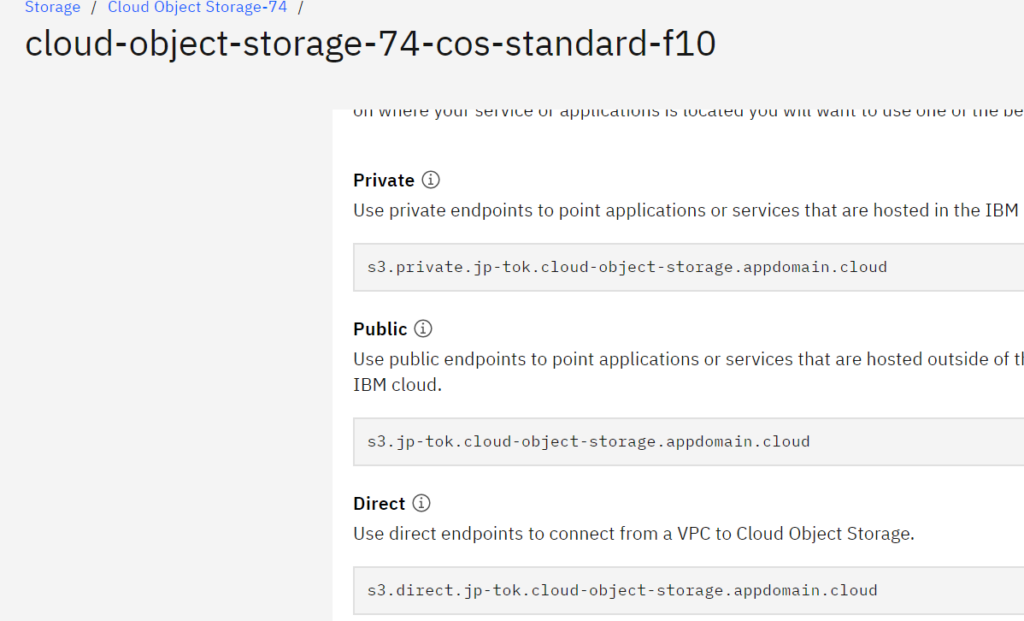
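Rather than pasting the copied credentials straight into a notebook cell, you could save them to a local JSON file and load them at run time. The sketch below assumes the file holds the JSON exactly as copied from the Service credentials page. The helper name `load_cos_credentials` and the file name in the usage note are hypothetical:

```python
import json
from pathlib import Path

# The two fields that the client setup in this post reads from the credentials
REQUIRED_KEYS = ("apikey", "resource_instance_id")

def load_cos_credentials(path):
    """Load service credentials from a JSON file and check the required keys."""
    credentials = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = [key for key in REQUIRED_KEYS if not credentials.get(key)]
    if missing:
        raise KeyError(f"credentials file is missing: {', '.join(missing)}")
    return credentials
```

For example, `cos_credentials = load_cos_credentials('cos_credentials.json')` keeps the API key out of the notebook itself.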

For endpoint_url, select Configuration under the bucket and copy the public endpoint URL.

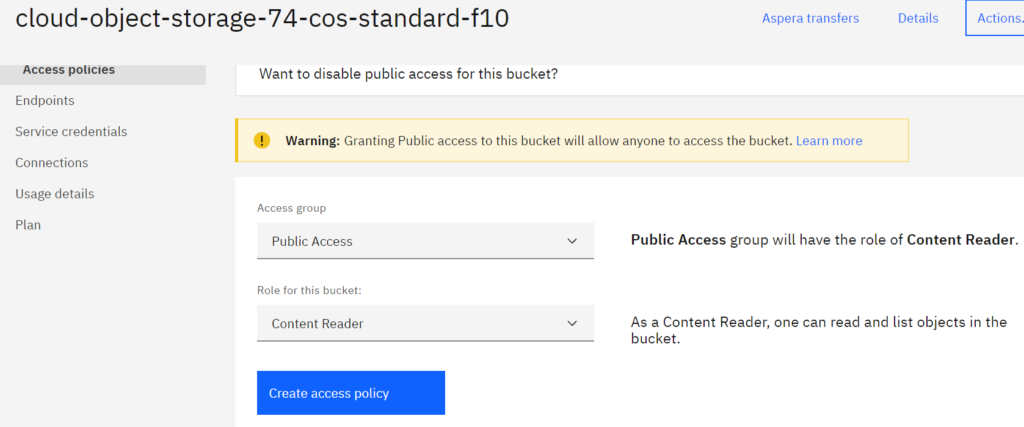
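The public endpoint used in this post follows a regional pattern. As a rough sketch, assuming your bucket is a regional one and its endpoint follows the same pattern as the `jp-tok` URL used here, the URL can be built from the location code. `public_endpoint` is a hypothetical helper, and the value shown on the bucket's Configuration page remains the authoritative one:

```python
def public_endpoint(region):
    """Build a regional public endpoint URL from a location code such as 'jp-tok'."""
    region = region.strip().lower()
    if not region:
        raise ValueError("region must not be empty")
    # Pattern inferred from the endpoint used in this post; other endpoint types differ
    return f"https://s3.{region}.cloud-object-storage.appdomain.cloud"

service_endpoint = public_endpoint("jp-tok")
```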

After this, grant public access so that the contents of your bucket can be read.
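Once public read access is in place, an object should also be reachable over plain HTTPS. The sketch below builds such a URL, assuming path-style addressing (endpoint, then bucket, then key). `public_object_url` is a hypothetical helper, and the endpoint, bucket, and file names are the ones used in this post:

```python
from urllib.parse import quote

def public_object_url(endpoint, bucket, key):
    """Return a path-style object URL: <endpoint>/<bucket>/<key>."""
    # quote() leaves '/' unescaped, so keys with folder-like prefixes stay intact
    return f"{endpoint.rstrip('/')}/{quote(bucket)}/{quote(key)}"

url = public_object_url(
    "https://s3.jp-tok.cloud-object-storage.appdomain.cloud",
    "cloud-object-storage-74-cos-standard-f10",
    "Censor.csv",
)
```

If the bucket really is publicly readable, `pd.read_csv(url)` should be able to load the file without any credentials.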

Run the code below in a Python Jupyter notebook to view the data in the file you uploaded to IBM Cloud Storage.

    from ibm_botocore.client import Config
    import ibm_boto3
    import pandas as pd
    import io

    # Service credentials copied from the Service credentials page
    cos_credentials = {
        "apikey": "*********************",
        "endpoints": "https://control.cloud-object-storage.cloud.ibm.com/v2/endpoints",
        "iam_apikey_description": "Auto-generated for key 0c6590b0-9625-4bf3-951b-389a7a5507f2",
        "iam_apikey_name": "cloud-object-storage-74-cos-standard-f10",
        "iam_role_crn": "crn:v1:bluemix:public:iam::::serviceRole:Writer",
        "iam_serviceid_crn": "crn:v1:bluemix:public:iam-identity::a/ccfb479a77e943759e482fa2198a5775::serviceid:ServiceId-0862d7bc-d250-4f89-a0bd-5dacd01dee4c",
        "resource_instance_id": "crn:v1:bluemix:public:cloud-object-storage:global:a/ccfb479a77e943759e482fa2198a5775:7c098bc1-1550-469d-b10d-e801e8b45412::",
    }

    # IAM token endpoint and the public endpoint copied from the bucket configuration
    auth_endpoint = 'https://iam.cloud.ibm.com/oidc/token'
    service_endpoint = 'https://s3.jp-tok.cloud-object-storage.appdomain.cloud'

    # Create an S3-compatible client that authenticates with the API key
    cos = ibm_boto3.client(
        's3',
        ibm_api_key_id=cos_credentials['apikey'],
        ibm_service_instance_id=cos_credentials['resource_instance_id'],
        ibm_auth_endpoint=auth_endpoint,
        config=Config(signature_version='oauth'),
        endpoint_url=service_endpoint,
    )

    # Download the object, then read its bytes through an in-memory buffer
    body = cos.get_object(Bucket='cloud-object-storage-74-cos-standard-f10', Key='Censor.csv')['Body']
    df = pd.read_csv(io.BytesIO(body.read()))

    # Display the DataFrame as the cell output
    df

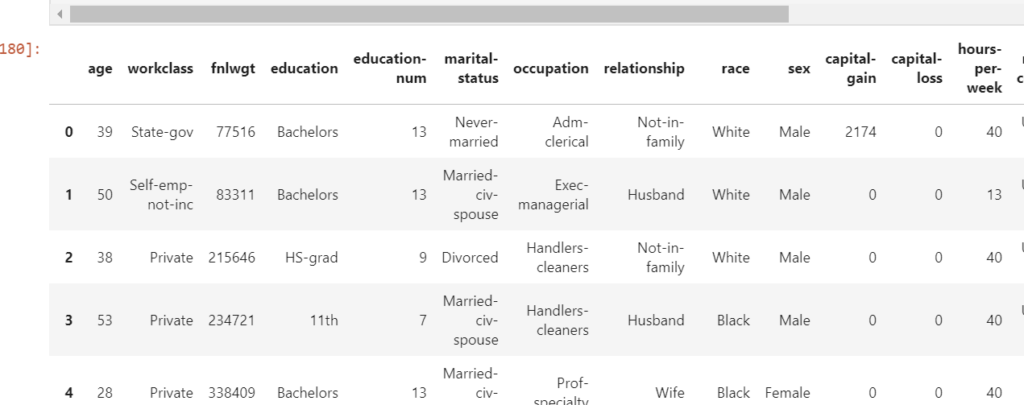

Output: